The artificial intelligence (AI) revolution is in full swing, and according to Nvidia CEO Jensen Huang, this transformative industry is just getting started. Speaking at the World Economic Forum in Davos, Huang emphasized that AI requires trillions of dollars in infrastructure investment to reach its full potential and avoid collapsing under its own weight.

The Largest Infrastructure Buildout in Human History

During his discussion with BlackRock’s Larry Fink, Huang likened AI development to a “five-layer cake,” with energy forming its foundation, followed by chips, cloud infrastructure, AI models, and applications at the top. Each layer requires an immense buildout before the industry can thrive properly.

“We’re now a few hundred billion dollars into it,” Huang explained. “There are trillions of dollars of infrastructure that needs to be built out.” He defended the investments as necessary, calling AI development the largest infrastructure buildout in human history, a statement in line with recent spending trends. In 2025 alone, worldwide AI spending was estimated at about $1.5 trillion, according to Gartner.

Is AI Overheating?

Despite concerns about an AI bubble, Huang maintains that this level of spending is crucial for global economic growth. Critics such as JPMorgan’s Jamie Dimon remain cautious, suggesting that while AI holds promise, not all investments will yield returns. An MIT study, for instance, found that 95% of companies investing in generative AI are not seeing a measurable return on investment.

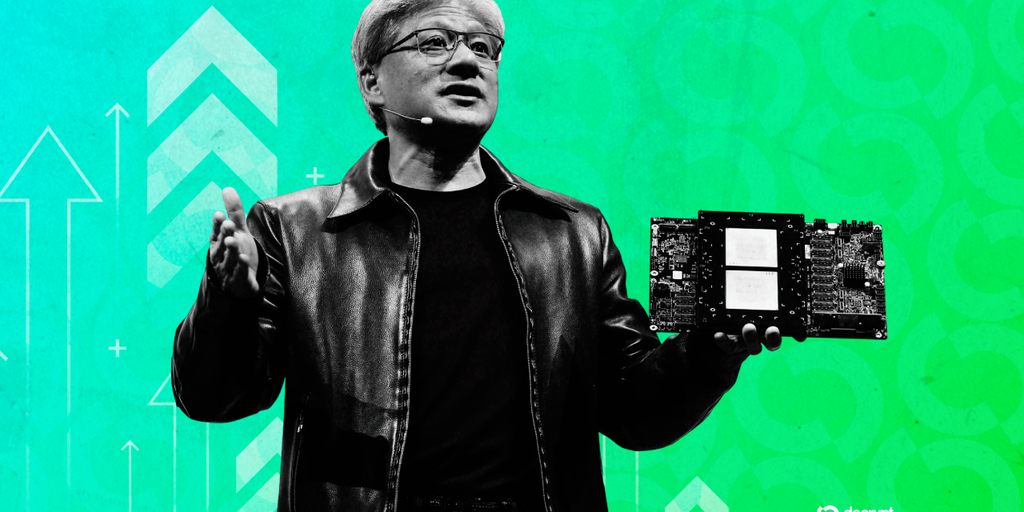

Some also allege a circular flow of financing in the AI industry. Nvidia, for example, recently committed up to $100 billion to OpenAI, which in turn spends a significant portion of that money on Nvidia chips. Critics describe the arrangement as artificially inflating demand.

The Race for AI Hardware Dominance

Nvidia may currently lead the AI hardware market, but competitors are making significant inroads. Startups like Cerebras, which promises faster AI inference speeds, have attracted investment. Major players such as AMD, Broadcom, and Google, with its own Tensor Processing Units (TPUs), are also positioning themselves as viable alternatives to Nvidia’s dominant GPU systems. Even Meta is reportedly exploring a partnership with Google for its data centers.

Powering the AI Revolution

Huang’s key message at Davos was clear: To sustain the ongoing AI boom, the world needs more energy, more chips, and more data centers. He suggested that current investment levels might not even be enough to meet global demand. “The opportunity is really quite extraordinary,” he concluded.

Whether that opportunity materializes or falters depends on how companies and governments navigate this uncharted territory. For those looking to support their innovation efforts, high-performance systems like the Nvidia H100 Tensor Core GPU provide cutting-edge processing power tailored for AI workloads.
